How Developers Survive Through Learning

Don't study by following the crowd — how to take ownership of your learning as a developer

In this post, I want to talk about something inseparable from being a developer: studying. Every profession requires ongoing learning, of course, but the IT industry changes faster than most — which means developers have no choice but to keep studying until they retire.

Developers are on the front lines of technology, which makes them especially sensitive to change. Some changes are passing fads; others are foundational knowledge that’ll serve you for the next 20 years. But no matter how much we study, the sheer volume of new technology makes it impossible to learn everything. So we have to evaluate whether a technology is a temporary trend, a lasting skill, or something we need right now — and choose accordingly.

In this post, I want to share the criteria I’ve used over my four years navigating the chaotic web frontend ecosystem — how I’ve chosen what to study and how I’ve gone about studying it. This is entirely my subjective opinion. It’s not the definitive answer, and it might not work for you at all. Take it as a “here’s how one person does it” kind of read.

Why We Can’t Afford to Slack on Learning

Before I explain my approach, let me first address the question: “Why can’t developers afford to neglect learning?” The simple answer is “you’ll fall behind in a fast-changing industry,” but that’s not a very satisfying response. It only describes the consequence, like saying “study hard or you’ll be left behind.” It doesn’t answer the “why,” and it doesn’t really motivate anyone.

So let me go a bit deeper.

Technology Advances Faster Than You Think

Even with just four years of experience, I’ve frequently thought “how does everything change so fast?” The changes ranged from minor (“oh, something new and nice”) to paradigm-shifting (“wait, what even is this?”).

If a developer with only four years of experience feels this way, I can only imagine what it’s like for veterans with ten or more. And since the pace of technological advancement keeps accelerating, change is only going to come faster.

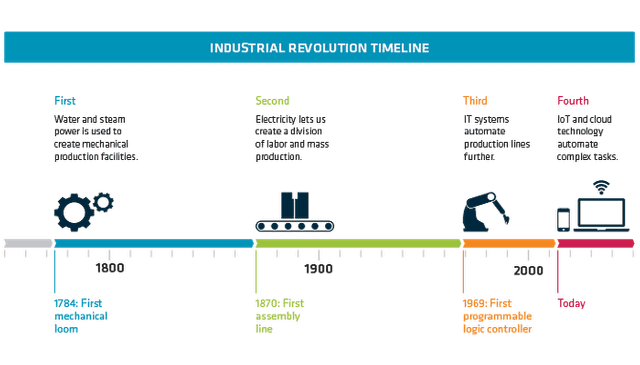

The pace of human technological advancement keeps accelerating.


The image above shows when each industrial revolution occurred. From the first in 1784 to the fourth in the present day, the gaps keep getting shorter. Human technological progress doesn’t grow linearly — it grows exponentially.

Using industrial revolutions as examples might feel too macro to relate to. But the small changes engineers notice day to day accumulate across the industry, creating synergies that eventually break through critical thresholds. An engineer’s ability to sense and keep up with these changes shouldn’t be underestimated.

In other words, here’s what “falling behind” actually means. Imagine a master farmer from the Silla dynasty in ancient Korea. That person could still make a living with the same skills 1,000 years later in the Joseon dynasty, despite the millennium-wide gap. By contrast, the human “computers” who performed calculations at the US Department of Defense in the 1970s held a job that no longer exists today.

This pace of change will only accelerate. That’s why people say “study or get left behind.” The shift from physical servers to cloud computing might seem like a minor detail, but if you brush it off and move on, within just two years you’ll find it increasingly difficult to follow the new paradigms that build on top of it.

Changes in IT Have Outsized Impact

Of course, knowledge in fields outside IT also evolves over time. But the IT industry doesn’t just change quickly — it frequently undergoes changes so fundamental that entire paradigms get flipped upside down.

In physics, for instance, when an existing theory is disproven and a new one is proposed, it can be significant enough to rewrite textbooks worldwide. But such events are rare. A famous example is Einstein’s theory of relativity, which proposed that time flows differently depending on the observer’s state — overturning thousands of years of assuming time was absolute.

In law, new legislation requires studying updated statutes and reviewing relevant precedents. This happens frequently as governments amend laws large and small, but these changes rarely overturn the fundamental paradigms that existing laws operate under. Proposing something that radical is extremely difficult.

In IT, however, changes that are both rapid and paradigm-shifting happen regularly. A few examples: the shift from jQuery to AngularJS, from MVC to Flux, the introduction of Docker containers, the emergence of serverless architecture. Though I didn’t experience it firsthand, I imagine it was similar when AWS first launched cloud computing services.

In IT, there have been many cases where you had to completely abandon an existing paradigm and focus entirely on something new just to understand what was happening. Each time, the ecosystem was transformed significantly, affecting not just developers but everyone’s daily lives.

You might wonder “how do these things affect everyday life?” Here are a few examples.

Thanks to these changes, many companies no longer buy physical servers and host them in data centers. They use cloud computing instead, which reduces the resources needed for server management and enables flexible traffic handling — significantly lowering the operational burden.

Today, knowing just JavaScript lets you build web clients, mobile apps, desktop applications, and servers. This means developers don’t need to learn multiple languages to build the service they want.

And by paying a modest fee to a cloud computing provider, you can set up and manage backend infrastructure with just a few clicks — making it possible for a single developer to operate a massive system.

These changes have lowered the barrier to programming dramatically compared to the past, ushering in an era where anyone with an idea can take a shot at an IT venture. The result? Tech giants like Google and Facebook that directly impact all of our lives.

How I Study

I’m one of those developers scrambling not to fall behind in this rapid current of technology, so naturally I study regularly. But in this post, rather than stating the obvious fact that you should study, I wanted to share how I actually go about it.

I’ve heard many developers, aspiring developers, and career changers express difficulty with studying. Their challenge isn’t usually the technical difficulty itself — it’s “I don’t know where to start.”

This is perfectly understandable in an age when information is flooding in from every direction. Before you even begin studying development, there are too many choices to make. Should you learn a language and framework first, or start with computer science fundamentals? Which language? React or Vue? The list of decisions is overwhelming before you’ve even written a line of code.

And since there’s no single right answer, asking developers around you or posting in communities will likely yield different responses from different people.

I personally think our tendency toward this kind of paralysis is partly shaped by cultural conditioning. Many of us grew up in educational systems where we studied what we were told to study rather than choosing our own path. Even when choosing a college major, many people pick based on their test scores rather than genuine interest.

Standardized tests matter, but failing them doesn't ruin your life. I nearly didn't get into college because I spent my time goofing off, and I turned out fine.

After college, you follow the crowd — getting certifications, scoring well on standardized tests, doing internships, preparing for job applications. In this process, it’s honestly hard to pause and ask “what do I actually want?” (You’re too busy trying not to fall behind everyone else.)

Given this background, when someone suddenly tells you “you’re an adult now, go figure out what you want to learn on your own,” it’s only natural that it feels unfamiliar.

So here’s what I’d suggest: set aside the question of what to study for a moment. There’s no good answer because no matter where you start, there’s too much to learn. What matters isn’t deciding what to study — it’s deciding what to build.

Decide What You Want to Build First

When someone tells me they don’t know what to study, the first question I always ask is:

“So, have you thought about what you want to build?”

Surprisingly, 9 out of 10 people answer “not really.” They’re trying to start studying development without knowing what they want to create. This approach leads to two problems:

- Without a clear goal, you have no idea when you’re “done” studying.

- You can’t immediately apply what you learn, so studying isn’t fun.

The first problem is the more serious one. When there’s no goal, or just a vague one like “I want to be good at development,” no amount of studying will tell you where the finish line is. In these cases, people often start with passion but gradually burn out.

Studying is really about how well you can focus. It’s better to finish one subject in a month and move on to the next than to spread yourself across 100 subjects over ten years. If you don’t know where to start, you’ll dabble in this and that, hit a wall, and give up.

The second problem is essentially an extension of the first. It’s just not fun. It’s similar to why English and math classes in school felt tedious — nobody told you what these subjects were actually for, just to memorize everything. Knowledge shines when applied in the right context. Knowledge crammed solely for exams has little lasting value.

So when I want to learn a particular technology, I first think about what I could build with it. If you think hard enough, at least one or two ideas always come up. Alternatively, you can start with something you want to build and study the necessary technology to make it. The key point is actually using the technology.

I personally prefer the latter approach. I decide what I want to build first, then study whatever knowledge is required. Sometimes that means analyzing research papers, sometimes it means pulling out a college textbook I barely touched, sometimes it means reading official documentation all night — but the desire to finish the project I’ve started keeps me studying to the end.

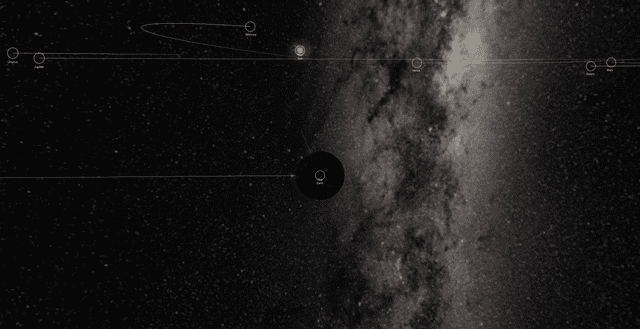

A solar system simulator I've been building since 2017


I’ve loved space since I was a kid, and from the moment I started coding, I wanted to simulate the movements of celestial bodies in our solar system. But building it required understanding computer graphics, mathematics, and astrophysics — which felt so daunting that I didn’t touch it for years.

Then one day, a few years into my career, I realized that at this rate, I’d never start the project. So I just dove in — studying Keplerian orbital equations and linear algebra — and eventually completed the project I’d been dreaming about for so long.

It was incredibly difficult. I’m not a huge fan of math, so I wasn’t even sure I could build something that was essentially wall-to-wall mathematics. I expected it to take about a year to study and build the basic framework. In the end, it took three months.

That doesn’t mean I fully understood Keplerian orbital equations in three months. I understood them just enough to build a solar system simulator. And since my goal was building the simulator — not mastering astrophysics — I didn’t need complete understanding.

I’m not particularly smart. I just studied consistently, cutting into my sleep every night. When equations didn’t make sense, I’d port them to code and step through them line by line. And this kind of grinding study is something anyone can do when they have a goal they truly want to achieve. (A truly smart person would have been buying Bitcoin instead of building a solar system simulator.)
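To make "porting an equation to code" concrete, here is a minimal sketch, assuming the equation in question is Kepler's equation for an elliptical orbit, M = E - e·sin(E), solved for E with Newton's method. The language (Python), the function names `solve_kepler` and `orbital_position`, and the tolerance values are all illustrative assumptions. This is not the simulator's actual code.

```python
import math


def solve_kepler(mean_anomaly: float, eccentricity: float,
                 tol: float = 1e-12, max_iter: int = 50) -> float:
    """Solve Kepler's equation M = E - e*sin(E) for the eccentric anomaly E.

    Assumes an elliptical orbit (0 <= e < 1) and angles in radians.
    Uses plain Newton iteration, which is easy to step through by hand.
    """
    # Common starting guess: M for low eccentricity, pi for very eccentric orbits.
    E = mean_anomaly if eccentricity < 0.8 else math.pi
    for _ in range(max_iter):
        f = E - eccentricity * math.sin(E) - mean_anomaly   # residual of the equation
        f_prime = 1.0 - eccentricity * math.cos(E)          # derivative with respect to E
        step = f / f_prime
        E -= step
        if abs(step) < tol:
            break
    return E


def orbital_position(mean_anomaly: float, eccentricity: float,
                     semi_major_axis: float) -> tuple[float, float]:
    """Return (x, y) in the orbital plane, with the focus (the sun) at the origin."""
    E = solve_kepler(mean_anomaly, eccentricity)
    x = semi_major_axis * (math.cos(E) - eccentricity)
    y = semi_major_axis * math.sqrt(1.0 - eccentricity ** 2) * math.sin(E)
    return x, y
```

Setting a breakpoint inside the loop and watching `f` shrink on each pass is the kind of line-by-line stepping described above: the symbols on paper turn into numbers you can inspect.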

I get destroyed in interviews and exams too, just like the rest of you.


At the end of the day, studying is about acquiring knowledge you need to build something. Studying without any goal is no different from cramming for standardized tests in high school. We need goals that are more fundamental than just “do well on the exam.”

By giving yourself a goal, you create strong motivation — and that motivation becomes the driving force for sustained, persistent learning. Before jumping into studying, first figure out why you need to study this in the first place.

Study With Your Own Convictions

I just talked about the importance of having a clear goal. The “decide what you want to build” approach I described is one way to create necessity — even for knowledge you don’t immediately need — and use that necessity as motivation.

That method, though, mainly helps you avoid quitting midway. We also need to think about which knowledge is truly necessary for us, and the definition of “necessary” differs from person to person.

For me, the priority is strictly “knowledge I need to build something.” I don’t care how trendy a technology is or if it has 90% market share — if it doesn’t interest me, I don’t study it. If I later join a company that needs that skill, I’ll learn it then.

Looking at the projects I’ve built, it’s obvious — as a frontend developer, the chances of using knowledge gained from building a solar system simulator or audio effects processor are pretty low. So when interviewers ask “why did you build this?”, there’s no grand reason. I just say “personal satisfaction.”

Since what counts as “necessary knowledge” varies by person, I can’t tell you what you should or shouldn’t study. But what I do want to say is that you need clear personal convictions when it comes to learning.

To illustrate what I mean by personal convictions, consider the people who picked up Flutter, Google’s cross-platform framework, early on. They probably weren’t studying it just because it was trending. Flutter wasn’t even that well known at the time, and its language, Dart, had limited use outside Flutter itself.

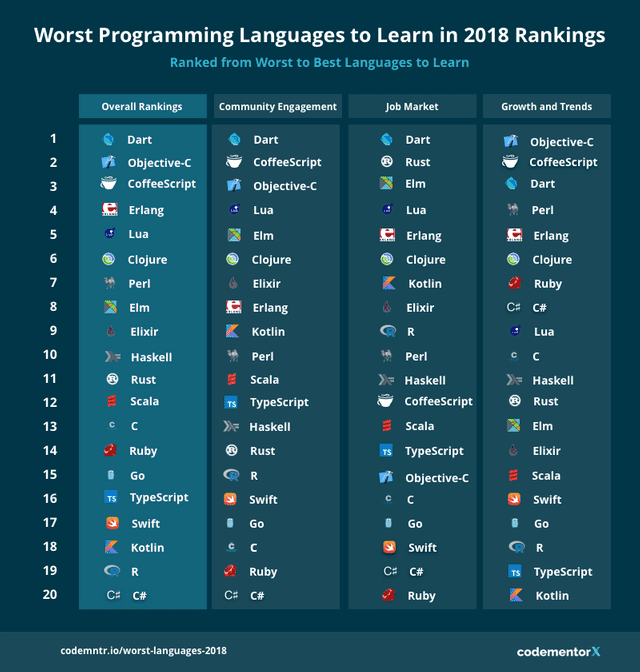

Codementor's 2018 survey of languages not recommended for learning. Dart proudly topped all three categories. (It improved a lot by 2019, though.)

So why study it? People probably had various reasons — it looked fun, it seemed interesting, they wanted to test it out. Since it wasn’t widely adopted and hadn’t been thoroughly vetted for performance or bugs, it wasn’t easy to use at work either. I think personal curiosity played a huge role.

People with strong personal convictions aren’t easily swayed by trends. They keep seeking out and studying what they need and what they’re interested in. If someone like this feels their networking knowledge is lacking, they’ll identify exactly what’s missing and study that — they won’t just jump on Kubernetes because it’s the hot thing.

But finding the answer to “what am I lacking right now?” is quite a difficult process. It requires constantly questioning yourself, comparing yourself with others, and thinking about what path you want to take. If you haven’t given this deep thought before, it’s worth starting now.

Wrapping Up

If you came here expecting study tips like a cram school prep course, I’m sorry — I can’t teach you how to study well. There’s no royal road to learning. You just get better by doing it consistently. What I wanted to share in this post was how to study a little more efficiently.

The two points I made above can be summarized like this:

1. Know why you’re studying something before you start.

2. Just because everyone’s using React doesn’t mean you have to use React too.

Point 1 is about motivation, which I emphasized repeatedly. Whether it’s studying or anything else, if you’re not properly motivated, the work becomes joyless and mechanical. There are limits to sheer effort alone.

Point 2 might be more controversial. Here’s my take: if everyone’s using React and you study it too, getting a job might be easier. But most technologies — especially frameworks — are products of their time, reflecting the paradigms and constraints of the moment. Who can say that something better and more revolutionary than React won’t appear in 2-3 years?

The “React or Vue?” questions you see in every community come from people trying to figure out which choice maximizes return on investment. Just pick whichever one has a name or logo you like and start studying. In three years, you might have to ditch both and learn something entirely new anyway.

What matters is “what do I need right now?” — not blindly following a technology just because it’s popular or because people say it’s good. Of course, “lots of people use it” can be a valid reason. What I’m cautioning against is the kind of decision where you’re just following the crowd without your own reasoning.

If someone says a framework is good and that makes you want to study it, you should at least evaluate for yourself what specifically makes it good — and whether it actually is. There’s a reason interviewers ask “why did you study this?” and “what did you find good and bad about it?” when you bring up a technology.

As I mentioned at the beginning, this post is absolutely not the definitive answer. I found a study method that works for me, and yours might be the same or completely different. The most important thing is to keep asking yourself what excites you, when you focus best, and what truly motivates you. This is just my personal take, but I hope it helps anyone who’s struggling with where to start when studying development.

That wraps up this post on how developers survive through learning.
