[Deep Learning Series] What is Deep Learning?

History of Neural Networks and the Starting Point of Modern Deep Learning

![[Deep Learning Series] What is Deep Learning?](/static/26718e1f085d210bba3e45681f40c1fa/2d839/thumbnail.jpg)

In this post, I want to write about what Deep Learning is and how it differs from traditional Neural Networks.

What is an Artificial Neural Network?

Humanity has been trying to create thinking machines for a long time. Various attempts were made along the way, and the method eventually devised was to imitate the human brain in a program. This idea became possible because in modern times we came to understand the structure of the human brain to some extent, and because that structure turned out to be simpler than expected.

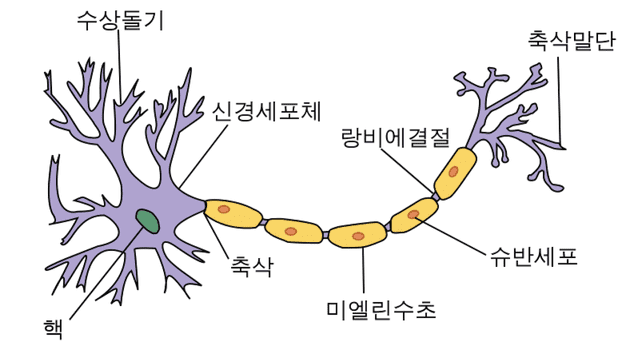

The human brain is an aggregate of cells called neurons. These neurons are simply cells that receive a signal, modulate it, and then transmit it again, and the brain is ultimately a structure where these neurons are connected like a network.

In short, while the human brain as a whole is connected in an extremely complex way, the individual neurons that form these connections have a surprisingly simple structure.

These neurons receive input signals at their dendrites and transmit output signals through their axons. For a signal to be passed on to the next neuron, it must exceed a certain strength. In more detail, the process roughly follows this sequence:

- The neuron receives signals from the multiple synapses connected to it. Each signal can be represented as the product of the amount of chemical substance secreted ($x$) and the time over which it is secreted ($w$).

- It combines the signals received from those synapses.

- A specific value ($b$) is added before transmitting to the next synapse.

- If the resulting value exceeds a specific threshold, the signal is transmitted to the next synapse.

Wait...? It's simpler than expected...?

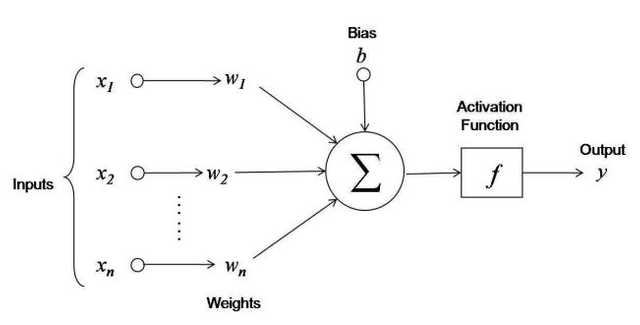

The human brain works on such simple principles… Then couldn’t we build this with machines? That is where the ANN (Artificial Neural Network) started. The way a neuron operates can be diagrammed as follows.

This diagram can be expressed again as a formula like this:

$$y = f\left(\sum_{i} w_i x_i + b\right)$$

Here, the function $f$ is called the Activation Function. It returns 1 if the value passed to it exceeds a certain threshold, and 0 otherwise.
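As a rough illustration of the neuron described above, here is a minimal Python sketch. The function names (`step`, `neuron`), the threshold of 0, and the sample weights are my own assumptions for the example, not something fixed by the model itself.

```python
def step(z, threshold=0.0):
    """Step activation: 1 if z exceeds the threshold, otherwise 0."""
    return 1 if z > threshold else 0


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the step activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)


# Weighted sum is roughly 0.6*1.0 + (-0.4)*0.5 + 0.1 = 0.5, which is above 0, so the neuron fires.
print(neuron([1.0, 0.5], [0.6, -0.4], 0.1))

# Same inputs and weights, but a bias of -0.9 pulls the sum below 0, so it stays silent.
print(neuron([1.0, 0.5], [0.6, -0.4], -0.9))
```

The only thing that changes between the two calls is the bias, which shows how it shifts the point at which the neuron starts firing.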

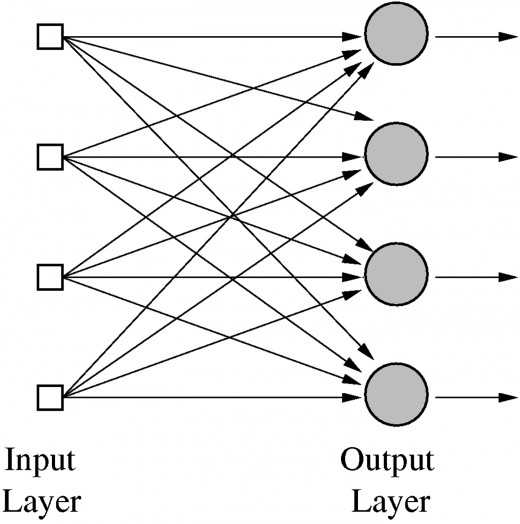

Thinking of this as one neuron, if we gather several of these neurons, it would roughly have a structure like below.

Machines of this form were already developed in the 1950s and could solve linearly separable problems such as AND and OR.

The Arrival of the Dark Age

Now one question arises here.

Wait, it’s 2018, 18 years into the 21st century, and if it was already developed to that point in the 1950s, shouldn’t robots be doing work for me now? And laundry too? Huh?

You might think this. First, let’s look again at AND and OR mentioned earlier.

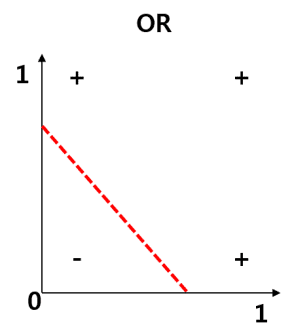

Since AND and OR are linearly separable, meaning a single straight line can divide the inputs that produce 1 from those that produce 0, even the Single Layer Networks of the 1950s could solve them without much difficulty.
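To make this concrete, here is a small sketch of a single neuron computing AND and OR. The weights and biases are hand-picked by me for illustration (many other values work just as well), and the `perceptron` helper is a hypothetical name.

```python
def perceptron(x1, x2, w1, w2, b):
    """Single neuron with a step activation at 0."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


def AND(x1, x2):
    # Fires only when both inputs are 1 (1 + 1 - 1.5 > 0).
    return perceptron(x1, x2, 1.0, 1.0, -1.5)


def OR(x1, x2):
    # Fires when at least one input is 1 (1 - 0.5 > 0).
    return perceptron(x1, x2, 1.0, 1.0, -0.5)


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "AND:", AND(x1, x2), "OR:", OR(x1, x2))
```

Both gates share the same weights; only the bias differs, which simply moves the separating line.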

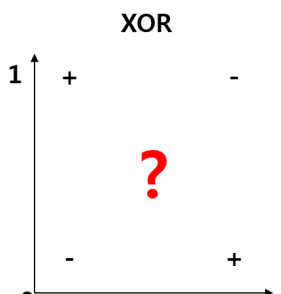

People who got this far thought “Awesome. Soon machines will be able to walk, run, and talk!” but the problem was XOR, which is not linearly separable.

No matter how you draw a line, there’s just no answer. XOR is a logical operation that is true only when its two inputs differ. It looks very simple, but because XOR is not linearly separable, a single straight line can classify at most three of the four input cases correctly, never all four. People who reached this point became frustrated.
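You can get a feel for this with a brute-force search. The sketch below tries every combination of weights and bias on a coarse grid (the grid range and step are arbitrary choices of mine) and records the best number of XOR cases a single neuron gets right. A grid search is only an illustration, not a proof.

```python
from itertools import product

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
XOR = [0, 1, 1, 0]


def predict(x1, x2, w1, w2, b):
    """Single neuron with a step activation at 0."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


# Candidate values from -4.0 to 4.0 in steps of 0.5.
grid = [i / 2 for i in range(-8, 9)]

best = 0
for w1, w2, b in product(grid, repeat=3):
    correct = sum(
        predict(x1, x2, w1, w2, b) == target
        for (x1, x2), target in zip(INPUTS, XOR)
    )
    best = max(best, correct)

print("best number of correct cases out of 4:", best)
```

The search tops out at three correct cases, for example with the OR weights, which get everything right except the (1, 1) input.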

We need to use MLP, Multi Layer Perceptrons. No one on earth had found a viable way to train MLPs good enough to learn such simple functions.

Perceptrons (1969), Marvin Minsky

Eventually, in 1969, Marvin Minsky (together with his co-author Seymour Papert) mathematically proved that the XOR problem cannot be solved with a Single Layer Network. He said it is possible with a Multi Layer Network, but that nobody knew how to train one.
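That a Multi Layer Network can represent XOR is easy to check by hand. In the sketch below, the hidden layer computes OR and NAND, and the output neuron ANDs them together. All weights are set manually by me for illustration; nothing is trained here, which is exactly the part that was missing at the time.

```python
def unit(x1, x2, w1, w2, b):
    """Single neuron with a step activation at 0."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


def xor(x1, x2):
    h1 = unit(x1, x2, 1.0, 1.0, -0.5)    # hidden neuron 1: OR
    h2 = unit(x1, x2, -1.0, -1.0, 1.5)   # hidden neuron 2: NAND
    return unit(h1, h2, 1.0, 1.0, -1.5)  # output neuron: AND of the two hidden outputs


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "XOR:", xor(x1, x2))
```

Each hidden neuron still draws only one straight line, but combining two lines is enough to carve out the region where XOR is true.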

I wanted to know more about this reason, so I looked up passages from Perceptrons and found:

it ought to be possible to devise a training algorithm to optimize the weights in this using, say, the magnitude of a reinforcement signal to communicate to the net the cost of an error. We have not investigated this.

Perceptrons (1969), Marvin Minsky

Googling around, I found opinions that the book Perceptrons focused on explaining why a Single Layer Network cannot learn XOR and then wrote something like:

Well… we know training with a Multi Layer Network should work, but we don’t quite know how to do it yet.

Anyway, this book disappointed many people, and because of this, the entire field of Neural Networks entered a dark age.

Time to Rise Again

In 1974, Paul Werbos published an algorithm called “Backpropagation” in his doctoral dissertation.

But sadly, nobody paid attention, and even Marvin Minsky, the author of Perceptrons, didn’t show interest. Werbos even republished the paper in 1982, but it was just buried again…

Then in 1986, Geoffrey Hinton, together with David Rumelhart and Ronald Williams, independently rediscovered this algorithm and presented it, and this time it gained attention. Thanks to this algorithm, it became known that training Multi Layer Networks is possible, and the field of Neural Networks became vibrant again.

The idea behind the Backpropagation algorithm is simple. It literally propagates (Propagation) the error backward (Back), starting from the layer closest to the output and moving toward the input. That’s where the name “Backpropagation” comes from.
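As a small preview, here is a sketch of that backward pass on a tiny 2-2-1 network. The sigmoid activation, the squared-error loss, the starting weights, and all function names are assumptions I chose for the example. The last lines compare one backpropagated gradient against a numerical estimate as a sanity check.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass: 2 inputs -> 2 hidden neurons -> 1 output."""
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(W1[j][i] * x[i] for i in range(2)) + b1[j]) for j in range(2)]
    y = sigmoid(sum(W2[j] * h[j] for j in range(2)) + b2)
    return h, y


def loss(params, x, t):
    _, y = forward(params, x)
    return 0.5 * (y - t) ** 2


def backprop(params, x, t):
    """Compute gradients by pushing the error from the output back to the input side."""
    W1, b1, W2, b2 = params
    h, y = forward(params, x)
    # Error signal at the output neuron (chain rule through the sigmoid).
    delta_out = (y - t) * y * (1 - y)
    grad_W2 = [delta_out * h[j] for j in range(2)]
    grad_b2 = delta_out
    # The output error is passed back through W2 to each hidden neuron.
    delta_hidden = [delta_out * W2[j] * h[j] * (1 - h[j]) for j in range(2)]
    grad_W1 = [[delta_hidden[j] * x[i] for i in range(2)] for j in range(2)]
    grad_b1 = delta_hidden
    return grad_W1, grad_b1, grad_W2, grad_b2


def numeric_grad_w1_00(params, x, t, eps=1e-6):
    """Central-difference estimate of d(loss)/d(W1[0][0])."""
    W1, b1, W2, b2 = params
    plus = ([[W1[0][0] + eps, W1[0][1]], W1[1]], b1, W2, b2)
    minus = ([[W1[0][0] - eps, W1[0][1]], W1[1]], b1, W2, b2)
    return (loss(plus, x, t) - loss(minus, x, t)) / (2 * eps)


# Arbitrary starting weights and one training example (illustrative values only).
params = ([[0.5, -0.3], [0.8, 0.2]], [0.1, -0.1], [0.7, -0.6], 0.05)
x, t = [1.0, 0.5], 1.0

analytic = backprop(params, x, t)[0][0][0]
numeric = numeric_grad_w1_00(params, x, t)
print("backprop gradient :", analytic)
print("numerical gradient:", numeric)
```

The two printed values should agree to several decimal places, which suggests the backward pass is computing the right gradient for that weight.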

I’ll cover this Backpropagation in the next post.

That’s all for this first Deep Learning post.
