Who Are You, the One Writing Code Right Now?

In an Era Where AI Thinks for Us, What Are We Losing?

AI is transforming our lives. When you use the ChatGPT mobile app and have voice conversations back and forth, it’s hard not to feel as if Jarvis from Iron Man has become reality.

Generative AI like ChatGPT is used for everything from casual conversation to complex problem-solving, making our lives richer and more convenient.

But I think we need to step back and ask whether the comfort these technologies provide has made us forget an important question: what is the fundamental significance of human existence?

For thousands of years, philosophers have argued that what makes us human is our capacity for self-reflection, critical and rational thinking, self-awareness, and moral judgment.

Yet lately, I see more and more people handing over to tools like ChatGPT the very faculties we’ve long considered essential to being human.

Of course, generative AI still isn’t perfectly accurate, so we haven’t delegated everything just yet. But the fact that the delegation has already begun is what matters. Given the pace of AI’s advancement, it’s not hard to imagine a future where this delegation accelerates rapidly.

And yet, compared to the speed at which AI is advancing, the ethical and philosophical discussion around it has been largely sidelined. I suspect this gap will come back to us as a serious side effect as AI develops further, and those building this technology should be paying the closest attention to it.

“I could have done better if I’d used ChatGPT”

Since 2016, I’ve interviewed countless developers. If I averaged four to six interviews per month, that comes to roughly 400–600 people over the years.

I’ve heard all kinds of answers and perspectives through these interviews, but one response I heard recently was something I’d never encountered before:

“I think I could have done better on the assignment if I’d been allowed to use ChatGPT.”

This struck me as a peculiar and unfamiliar answer. It was essentially an admission that without a specific tool, whether ChatGPT or Google, the person couldn’t fully solve the problem in front of them.

Typically in interviews, candidates avoid underselling themselves. They try to emphasize what they can do on their own merits, without external tools. Everyone understands that interviews are meant to find people who can solve problems on their own and use tools to amplify those abilities, not people who can’t solve problems without them.

Some of you reading this might argue: “We’ll use ChatGPT on the job anyway, so what’s wrong with using tools during the evaluation?” Why not just assess the ability to use these tools effectively?

I use ChatGPT at work too. And I won’t deny that using it well can dramatically boost productivity. But the topic I want to explore in this post isn’t something as narrow as “should we test ChatGPT proficiency in interviews.”

Think about it. Generative AI has been mainstream for only about two years, so for now, humans still need to craft good prompts to get good results. But soon enough, AI will reach the point where even a vague, poorly worded question produces a perfect answer.

If AI reaches that level, what does it even mean to “assess someone’s ability to use the tool”? If anyone, regardless of knowledge, can ask AI a sloppy question and get a perfect response, the very concept of tool proficiency becomes meaningless.

The real issue is this: even though generative AI has been mainstream for only about two years, the number of people depending on it for their thinking is growing fast, yet hardly anyone is paying attention to ethical questions like “how should we approach AI?” or “what does it mean to outsource our thinking to AI?”

As I mentioned earlier, people currently know that generative AI isn’t perfect, so full delegation of thinking hasn’t happened yet. But the moment people start believing that AI produces better answers than they can, the degree of delegation will escalate rapidly.

This is fundamentally different from simply using a tool to increase productivity. Every tool up to now has either assisted human capabilities or enabled things humans couldn’t do on their own.

But AI can think and make decisions at a level nearly equal to humans, and in certain domains, it has already surpassed us. Unlike every tool that came before, it’s now possible to delegate not just labor but human thought itself to AI. And there’s almost no sign that people are wary of this possibility.

Given that we’re already delegating parts of our thinking to AI just two years after generative AI entered the mainstream, I believe this trend will only accelerate.

What is the fundamental significance of human existence?

To explain the concern I’ve been carrying, we first need to examine what has historically been defined as the reason humans can exist as humans. “What is a human being?” is a question philosophers have explored for thousands of years.

We know we possess something that distinguishes us from other animals, but because no one has given us a definitive answer, we’ve spent millennia searching for one ourselves.

Plato, through his Theory of Forms, said humans are beings who pursue truth and ideals. Aristotle defined humans as rational animals. Descartes, with his proposition “I think, therefore I am,” linked human existence to thought and self-awareness. Kant defined humans as autonomous agents capable of moral judgment. Sartre said humans are beings who create their own essence.

Countless philosophers have offered various definitions of human nature and the significance of existence, but if you look closely, there are a few common threads.

The most prominent commonality is self-reflection and self-awareness: the ability to understand oneself, analyze one’s thoughts and actions, and pursue a deep understanding of one’s own existence. Plato’s and Aristotle’s emphasis on reason, Descartes’ thought and self-awareness, Kant’s moral autonomy, and Sartre’s self-created essence all revolve around this core.

In other words, humans are not beings who merely experience and react. We are beings who analyze and evaluate our experiences, and from them discover new meaning and direction.

The essential human trait that philosophers have long emphasized, self-reflection and self-awareness, is what elevates us beyond mere biological existence. If there is any chance that we might delegate this to AI, the issue deserves deep contemplation.

Does AI threaten the essence of human existence?

Let’s return to the interviewee’s response, “I could have done better if I’d used ChatGPT,” and to the danger I described of humans increasingly delegating their thinking to AI. Taken literally, the answer means the person couldn’t adequately perform the task without AI assistance, a small illustration of how human cognitive ability is becoming dependent on AI.

Thought, self-reflection, and self-awareness, meaning the capacity to understand oneself, analyze one’s thoughts and actions, and pursue deep self-understanding, are what define us as human. They give humans their value beyond mere biological existence.

Based on this reasoning, I want to argue that we need to be vigilant about the possibility that AI threatens the essence of human existence.

As AI advances, we’re delegating more and more of our thinking to it. If relying on AI to solve problems becomes the norm, we’ll likely skip the process of thinking deeply on our own, which means losing opportunities for self-reflection and narrowing our capacity for thought.

Going further, if AI increasingly makes our decisions for us, we risk losing responsibility and autonomy, key components of self-awareness. The more we depend on AI’s decisions, the less accountable we feel for the outcomes, which could weaken our capacity for moral judgment.

These questions about responsibility and moral judgment have long surfaced in everyday debates like “who’s responsible when a self-driving car causes an accident?” None of these questions have been conclusively answered.

We must stay alert to the fact that as AI advances and our dependence deepens, we risk losing the very capabilities that define us as human. And those who are leading this technology should be paying even more attention to this ethical discourse.

Who are you, the one writing code right now?

As you’ve probably guessed, the people leading this technology are developers and researchers — especially those contributing directly to the AI domain.

Yet lately, even among developers, I see cases where people accept AI-suggested code from ChatGPT or Copilot without any critical review.

ChatGPT and Copilot are advancing rapidly, and it’s true they produce higher-quality code than before. But can we honestly say their output is flawless at this point?

No, we can’t. AI-generated code sometimes contains errors, and in certain situations, it fails to provide a complete solution. In particular, understanding context across multiple modules and designing architecture remain problems AI hasn’t conquered.

If AI-generated code were already perfect, the profession of software developer would have ceased to exist. The fact that developers are still needed is itself a strong sign that AI’s code is not yet complete. As AI advances, the number of people in this profession may shrink, but for now, that’s not the case.

So what role should we — the people with expertise in this technology — play?

Developers shouldn’t simply accept AI’s output. We should critically review and revise what AI generates. We must view AI as a tool and use it to maximize our own thinking and creativity. Even with AI’s help, the final decisions and responsibility rest with us.

Given that AI still hallucinates, it is too early to copy and paste AI-generated code without review or to treat AI’s responses as truth. Even if the day comes when AI produces perfect answers, we must never forget that AI should be controlled by experts as a tool. (AI is a library, not a framework: we call it; it doesn’t call us.)

If you’re accepting AI’s output uncritically and mistaking it for your own ability, ask yourself:

Am I a human who uses AI as a tool? Or am I merely a vessel that transfers AI’s output into an IDE?

AI cannot become “me.” We can receive AI’s help, but in that process, we must remain vigilant about preserving our ability to think and judge for ourselves.

This question goes beyond programming. It demands reflection on the nature of being human. If what makes us fundamentally human is the ability to understand ourselves through self-reflection and self-awareness, to explore the meaning of our existence, then merely following the instructions of a non-human tool cannot constitute human existence.

Closing thoughts

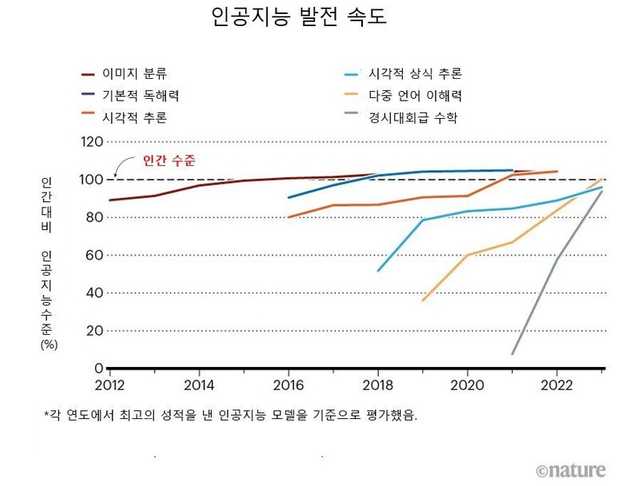

Some might say these thoughts are premature, or that I’m making too great a leap. But as you know, AI is advancing at a staggering pace, and in certain fields, AI has surpassed humans in less than a decade.

Math aside, look at the terrifying slope in language comprehension.


The number of AI-related projects on GitHub was only around 800 in 2011, but by 2023 it had grown to roughly 1.8 million. Over the same period, the number of research papers roughly tripled.

I believe our perspective on this technology, including our discussion of its potential side effects, has not kept pace with how fast it is advancing.

AI is fundamentally different from every tool that came before. Previous tools, however powerful, could never surpass humans as thinkers, and because of that inherent limitation, even the best tools could only assist.

But as the chart above shows, AI has already surpassed humans in certain areas. AGI may still be some time away, but in specific domains, AI outperforming humans has already happened. We need to pay attention to this.

What I want to say is this: as we use this convenient, explosively productive tool called AI, we should pause and ask whether we’re unknowingly delegating our essential human value to it.

A tool is called a tool because humans wield it with agency. If using AI causes us to lose our autonomy and the fundamental significance of being human, can we still call it a tool?

Building a better future alongside AI requires that ethical and philosophical discourse keep pace with technological development. Those of us leading this technology shouldn’t be focused solely on advancing the technology itself. We need to actively participate in these discussions and care about building a world where we, as humans, can properly wield AI as the powerful tool it is.

This concludes my post: Who Are You, the One Writing Code Right Now?